Text as a Richer Source of Supervision in Semantic Segmentation Tasks

Jan 1, 2023

Valérie Zermatten

Javiera Castillo Navarro

Lloyd Hughes

Tobias Kellenberger

Devis Tuia

Abstract

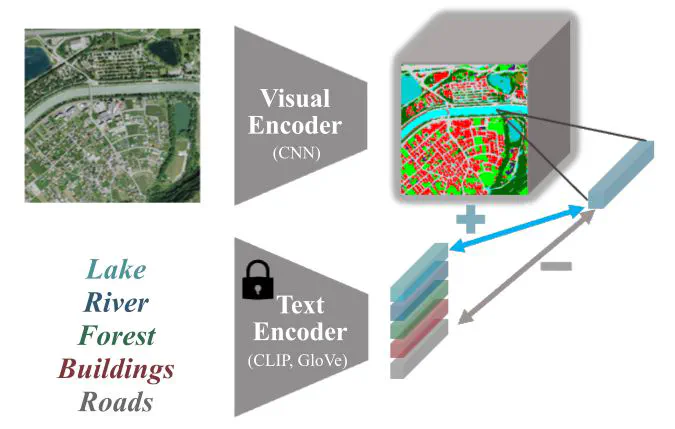

This paper introduces TACOSS, a text-image alignment approach that enables explainable land cover semantic segmentation by directly integrating semantic concepts encoded from text. TACOSS combines convolutional neural networks for visual feature extraction with semantic embeddings provided by a language model. Using contrastive learning, we learn an alignment between the visual representations and the (fixed) textual representations. In addition to producing standard semantic segmentation outputs, our model enables interactive queries of remote sensing (RS) images using natural language prompts. Experimental results on 50 cm resolution aerial data from Switzerland show that TACOSS performs similarly to a standard semantic segmentation model while allowing flexible use of in-vocabulary and out-of-vocabulary terms when interacting with the image.
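The abstract does not spell out the training objective, so the following NumPy sketch is only an illustration under stated assumptions, not the authors' implementation. It assumes per-pixel visual features have already been projected into the same D-dimensional space as frozen text embeddings of the class names, and that alignment uses an InfoNCE-style pixel-wise cross-entropy over temperature-scaled cosine similarities. All function names and the temperature of 0.07 are hypothetical, and random vectors stand in for the CNN features and the language-model embeddings.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-8):
    """Scale vectors along `axis` to (approximately) unit length."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def pixel_text_logits(pixel_feats, text_emb, temperature=0.07):
    """Temperature-scaled cosine similarity between every pixel and every class text.

    pixel_feats: (H, W, D) visual features, assumed already projected to the text space.
    text_emb:    (K, D) fixed (frozen) text embeddings, one per class name.
    Returns:     (H, W, K) logits. The temperature value is a hypothetical choice.
    """
    v = l2_normalize(pixel_feats)
    t = l2_normalize(text_emb)
    return np.einsum("hwd,kd->hwk", v, t) / temperature


def contrastive_pixel_loss(logits, labels):
    """Mean InfoNCE-style cross-entropy: pull each pixel toward its own class text
    embedding and away from the other K - 1 class embeddings (an assumed objective).

    logits: (H, W, K); labels: (H, W) integer class indices.
    """
    # Numerically stable log-softmax over the class axis.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    h, w = labels.shape
    # Gather the log-probability of the true class at every pixel.
    picked = log_probs[np.arange(h)[:, None], np.arange(w)[None, :], labels]
    return float(-picked.mean())


def segment(logits):
    """Standard segmentation map: index of the most similar class text per pixel."""
    return logits.argmax(axis=-1)


def query_map(pixel_feats, query_emb):
    """Cosine-similarity heat map for one free-text query embedding of shape (D,).

    In this sketch the query need not be a training class name (out-of-vocabulary),
    as long as it is embedded by the same frozen language model.
    """
    return l2_normalize(pixel_feats) @ l2_normalize(query_emb)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    K, D, H, W = 3, 8, 4, 4
    # Random stand-ins for frozen language-model embeddings of K class names.
    text_emb = rng.normal(size=(K, D))
    labels = rng.integers(0, K, size=(H, W))
    # Toy "aligned" visual features: each pixel lies near its class's text embedding.
    pixel_feats = text_emb[labels] + 0.1 * rng.normal(size=(H, W, D))

    logits = pixel_text_logits(pixel_feats, text_emb)
    print("loss:", contrastive_pixel_loss(logits, labels))
    print("prediction matches labels:", bool((segment(logits) == labels).all()))
    print("query map shape:", query_map(pixel_feats, text_emb[0]).shape)
```

Because the text embeddings stay fixed in this setup, only the visual side would be trained. The same similarity computation then yields both the standard argmax segmentation and a per-pixel heat map for any prompt embedded by the same language model.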

Publication

IEEE International Geoscience and Remote Sensing Symposium (IGARSS)