Knowledge-Aware Visual Question Generation for Remote Sensing Images

Abstract

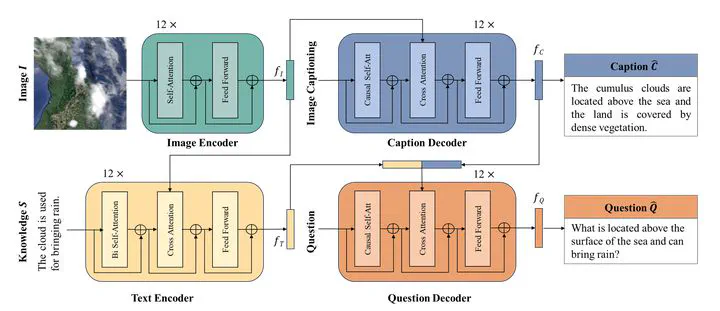

With the rapid growth of remote sensing image archives, asking questions about images has become an effective way to gather specific information or perform image retrieval. However, automatically generated image-based questions tend to be simplistic and template-based, which hinders the real-world deployment of question answering and visual dialogue systems. To enrich and diversify the questions, we propose KRSVQG, a knowledge-aware remote sensing visual question generation model that incorporates external knowledge related to the image content to improve the quality and contextual understanding of the generated questions. The model takes as inputs an image and a related knowledge triplet drawn from external knowledge sources, and it uses image captioning as an intermediate representation to strengthen the image grounding of the generated questions. To assess the performance of KRSVQG, we use two datasets that we manually annotated: NWPU-300 and TextRS-300. Results on these two datasets demonstrate that KRSVQG outperforms existing methods and produces knowledge-enriched questions grounded in both the image and domain knowledge.

Publication

IEEE International Geoscience and Remote Sensing Symposium